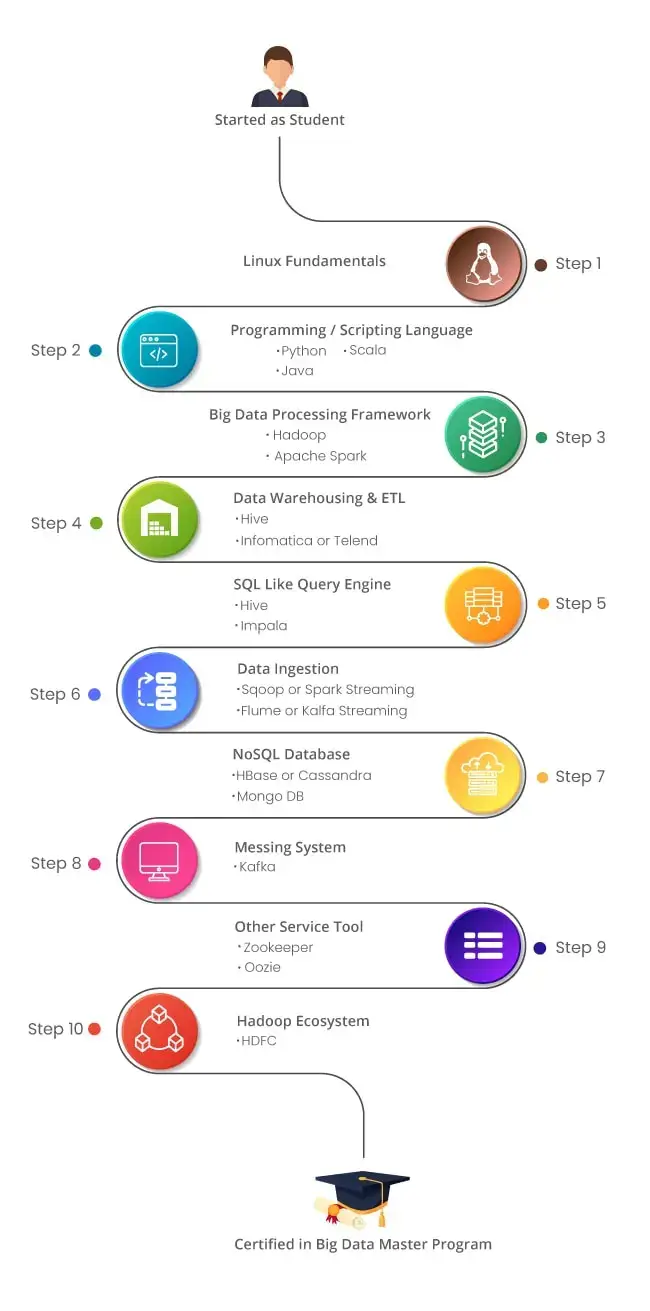

Big Data Masters Program Syllabus

Java Essentials

SQL

Our SQL training teaches beginners how to use SQL in a relational database management system (RDBMS). The training is delivered by working corporate experts and top industry professionals, and its standards are certified by Oracle Corporation.

Oracle SQL Training Syllabus

- Introduction to Oracle Database

- Retrieve Data using the SQL SELECT Statement

- Learn to Restrict and Sort Data

- Use Single-Row Functions to Customize Output

- Invoke Conversion Functions and Conditional Expressions

- Aggregate Data Using the Group Functions

- Display Data From Multiple Tables Using Joins

- Use Sub-queries to Solve Queries

- The SET Operators

- Data Manipulation Statements

- Use DDL Statements to Create and Manage Tables

- Other Schema Objects

- Control User Access

- Management of Schema Objects

- Manage Objects with Data Dictionary Views

- Manipulate Large Data Sets

- Data Management in Different Time Zones

- Retrieve Data Using Sub-queries

- Regular Expression Support

Linux

Fundamentals of Linux

- Overview of all basic commands

- Vim editor modes

- Filesystem hierarchy – Basic topics

- File and directories creation

- Grep

- Filter commands (head, tail, more, less)

- Creating users and groups

- Important files related to users and groups

- Modifying and deleting users and groups

- Linux permissions

- Basic permissions overview

- Software management

- Yellowdog Updater, Modified (yum)

- Yum commands

- Different runlevels

- Services and daemons

Big Data Hadoop

Learn Hadoop from beginner level through advanced techniques, taught by experienced working professionals. Our Hadoop training covers concepts at an expert level in a practical, hands-on manner.

- Big Data – Challenges & Opportunities

- Installation and Setup of Hadoop Cluster

- Mastering HDFS (Hadoop Distributed File System)

- MapReduce Hands-on using Java

- Big Data Analytics using Pig and Hive

- HBase and Hive Integration

- Understanding of ZooKeeper

- YARN Architecture

- Understanding Hadoop framework

- Linux Essentials for Hadoop

- Mastering MapReduce

- Using Java, Pig, and Hive

- Mastering HBase

- Data loading using Sqoop and Flume

- Workflow Scheduler Using Oozie

- Hands-on Real-time Project

Apache Spark & Scala

Apache Spark is designed for in-memory computing, which enables lightning-fast processing of applications. It is a processing engine built for faster processing, ease of use, and better analytics.

Module 1: Getting Familiar with Spark

- Apache Spark in Big Data Landscape and purpose of Spark

- Apache Spark vs. Hadoop MapReduce

- Components of Spark Stack

- Downloading and installing Spark

- Launch Spark

Module 2: Working with Resilient Distributed Dataset (RDD)

- Transformations and Actions in RDD

- Loading and Saving Data in RDD

- Key-Value Pair RDD

- MapReduce and Pair RDD Operations

- Playing with Sequence Files

- Using Partitioner and its impact on performance improvement
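To make the MapReduce and pair RDD topics in Module 2 more concrete, here is a minimal plain-Java sketch of the word-count pattern split into map, shuffle, and reduce phases. It deliberately avoids the Spark API so it runs without a Spark installation; the class and method names (`PairRddSketch`, `mapPhase`, `shuffle`, `reducePhase`, `partitionFor`) are hypothetical. The partitioning rule follows the same idea as Spark's `HashPartitioner` (a non-negative modulo of the key's `hashCode`, with null keys sent to partition 0), but treat this as an illustration, not Spark's implementation.

```java
import java.util.AbstractMap;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class PairRddSketch {

    // Map phase: turn each line into (word, 1) pairs,
    // analogous to flatMap followed by mapToPair on a pair RDD.
    static List<Map.Entry<String, Integer>> mapPhase(List<String> lines) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String line : lines) {
            for (String word : line.toLowerCase().split("\\s+")) {
                if (!word.isEmpty()) {
                    pairs.add(new AbstractMap.SimpleEntry<>(word, 1));
                }
            }
        }
        return pairs;
    }

    // Hash partitioning: null keys go to partition 0; otherwise use the key's
    // hashCode modulo numPartitions, shifted so the result is never negative.
    static int partitionFor(Object key, int numPartitions) {
        if (key == null) {
            return 0;
        }
        int mod = key.hashCode() % numPartitions;
        return mod < 0 ? mod + numPartitions : mod;
    }

    // Shuffle: route every pair to the partition chosen for its key,
    // so all values for one key end up in the same partition.
    static List<List<Map.Entry<String, Integer>>> shuffle(
            List<Map.Entry<String, Integer>> pairs, int numPartitions) {
        List<List<Map.Entry<String, Integer>>> partitions = new ArrayList<>();
        for (int i = 0; i < numPartitions; i++) {
            partitions.add(new ArrayList<>());
        }
        for (Map.Entry<String, Integer> pair : pairs) {
            partitions.get(partitionFor(pair.getKey(), numPartitions)).add(pair);
        }
        return partitions;
    }

    // Reduce phase: sum the values for each key, analogous to reduceByKey(Integer::sum).
    // Because the shuffle placed every occurrence of a key in one partition,
    // each key's total comes from a single partition. Results are kept in key order.
    static Map<String, Integer> reducePhase(List<List<Map.Entry<String, Integer>>> partitions) {
        Map<String, Integer> result = new TreeMap<>();
        for (List<Map.Entry<String, Integer>> partition : partitions) {
            for (Map.Entry<String, Integer> pair : partition) {
                result.merge(pair.getKey(), pair.getValue(), Integer::sum);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList("spark is fast", "Spark is easy to use");
        List<List<Map.Entry<String, Integer>>> partitions = shuffle(mapPhase(lines), 3);
        System.out.println(reducePhase(partitions)); // {easy=1, fast=1, is=2, spark=2, to=1, use=1}
    }
}
```

In Spark itself, the same pipeline would typically be written with `flatMap`, `mapToPair`, and `reduceByKey` on a `JavaPairRDD`, and the partitioner decides how the shuffle distributes keys. Choosing a partitioner up front can reduce repeated shuffles when the same keys are aggregated or joined more than once.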

Module 3: Spark Application Programming

- Master SparkContext

- Initialize Spark with Java

- Create and Run Real-time Project with Spark

- Pass functions to Spark

- Submit Spark applications to the cluster

Module 4: Spark Libraries

Module 5: Spark configuration, monitoring, and tuning

- Understand various components of Spark cluster

- Configure Spark by modifying:

  - Spark properties

  - environment variables

  - logging properties

- Visualizing Jobs and DAGs

- Monitor Spark using the web UIs, metrics, and external instrumentation

- Understand performance tuning requirements

Module 6: Spark Streaming

- Understanding the Streaming Architecture – DStreams and RDD batches

- Receivers

- Common transformations and actions on DStreams
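The micro-batch idea behind DStreams can be illustrated without Spark: incoming records are grouped into fixed-length batch intervals, and each batch is then processed like an ordinary dataset. This is a plain-Java sketch under stated assumptions (each record carries its arrival time in milliseconds, and the batch interval is 1 second in the example); the names `MicroBatchSketch`, `Event`, `toBatches`, and `countPerBatch` are hypothetical and are not part of the Spark API.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class MicroBatchSketch {

    // One incoming record; timestampMs is assumed to be its arrival time at the receiver.
    static final class Event {
        final long timestampMs;
        final String value;

        Event(long timestampMs, String value) {
            this.timestampMs = timestampMs;
            this.value = value;
        }
    }

    // Cut the stream into consecutive fixed-length batches keyed by each batch's
    // start time. Each batch plays the role of the RDD a DStream produces per interval.
    static Map<Long, List<String>> toBatches(List<Event> events, long batchIntervalMs) {
        Map<Long, List<String>> batches = new TreeMap<>();
        for (Event e : events) {
            long batchStart = Math.floorDiv(e.timestampMs, batchIntervalMs) * batchIntervalMs;
            batches.computeIfAbsent(batchStart, k -> new ArrayList<>()).add(e.value);
        }
        return batches;
    }

    // Apply the same transformation (here, a count) to every batch independently,
    // similar in spirit to calling count() on a DStream.
    static Map<Long, Integer> countPerBatch(Map<Long, List<String>> batches) {
        Map<Long, Integer> counts = new TreeMap<>();
        for (Map.Entry<Long, List<String>> batch : batches.entrySet()) {
            counts.put(batch.getKey(), batch.getValue().size());
        }
        return counts;
    }

    public static void main(String[] args) {
        List<Event> events = Arrays.asList(
                new Event(100, "a"), new Event(900, "b"),
                new Event(1500, "c"), new Event(2100, "d"));
        Map<Long, List<String>> batches = toBatches(events, 1000);
        System.out.println(batches);                // {0=[a, b], 1000=[c], 2000=[d]}
        System.out.println(countPerBatch(batches)); // {0=2, 1000=1, 2000=1}
    }
}
```

One simplification to keep in mind: this sketch only creates entries for intervals that actually received data, whereas Spark Streaming generates an RDD for every batch interval, including empty ones.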

Module 7: MLlib and GraphX